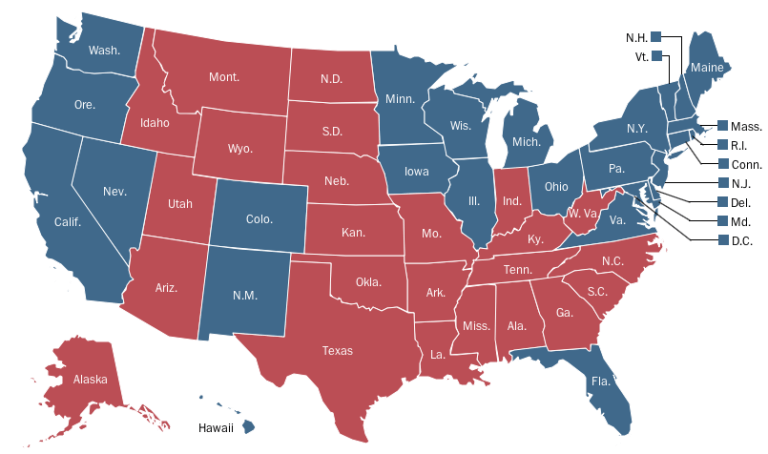

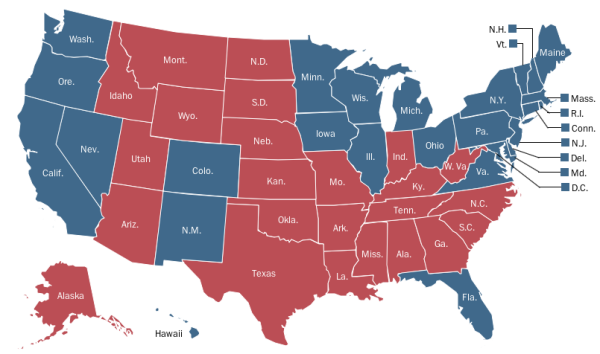

Nate Silver of the New York Times’ FiveThirtyEight election blog has become a celebrity after correctly predicting all 50 states and the District of Columbia in the 2012 presidential election.

More quietly, two Stanford professors, Simon Jackman and Drew Linzer, also got perfect scores. Jackman, a professor of political science, and Linzer, a visiting associate professor at the Center on Democracy, Development and the Rule of Law, were dubbed “master quants” by New York Magazine earlier this month.

Jackman’s poll-averaging model, Pollster, is published by the Huffington Post, and Linzer operates votamatic.org, a website detailing his electoral predictions.

Methodologies

Linzer, who established his website as a complement to his academic research, described his methodology as a two-stage process.

“I use a statistical model that relies on two sources of information,” he said. “In the summer time… I created what’s called a baseline forecast using ‘the fundamentals’: structural factors like how well the economy is doing, how popular the incumbent president is…and we put these things together over the last 15 elections or so.”

“From there, the second source of information is the state-level opinion polls,” he said. According to Linzer, more than 1,700 such polls were conducted in 2008, which amounted to over a million interviews. Although just 1,200 polls were conducted in 2012, they still provided statisticians with a wealth of data.
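The idea of starting from a fundamentals-based baseline and then folding in state polls can be illustrated with a textbook normal-normal (precision-weighted) update. The sketch below is a simplified stand-in, not Linzer’s actual model: it assumes the baseline is a normal distribution over a candidate’s two-party vote share, treats each poll as a simple random sample with only binomial sampling error, and ignores pollster house effects and timing. The function names and all numbers are hypothetical.

```python
def poll_variance(share, n):
    """Sampling variance of a poll proportion, assuming simple random sampling."""
    return share * (1 - share) / n


def update_forecast(prior_mean, prior_var, polls):
    """Combine a baseline forecast with polls by precision weighting.

    prior_mean, prior_var: hypothetical baseline ("fundamentals") estimate of a
        candidate's two-party vote share and its variance.
    polls: list of (share, sample_size) tuples.
    Returns (posterior_mean, posterior_var) under a normal-normal model.
    """
    # Start from the baseline; its precision is the inverse of its variance.
    precision = 1.0 / prior_var
    weighted_sum = prior_mean * precision
    for share, n in polls:
        # Each poll counts in proportion to its own precision,
        # so larger samples pull the estimate harder.
        poll_precision = 1.0 / poll_variance(share, n)
        precision += poll_precision
        weighted_sum += share * poll_precision
    return weighted_sum / precision, 1.0 / precision


# Hypothetical example: a 52% baseline (3-point standard deviation)
# plus two state polls of 800 and 1,000 respondents.
mean, var = update_forecast(0.52, 0.03 ** 2, [(0.50, 800), (0.51, 1000)])
print(f"{mean:.3f} +/- {var ** 0.5:.3f}")
```

With these made-up inputs, the polls pull the estimate from 52% down to about 50.7% and shrink the uncertainty from 3 points to about 1.1 points, which is the sense in which a large body of polling can sharpen a baseline forecast.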

Jackman, who has been making predictions based on polling numbers since the 2000 presidential election, described the process as simple in concept but more nuanced in practice.

“It’s pretty simple, really. If I’ve got two polls, can I put them together to extract the information in both to get a better estimate of what’s going on out there than any one poll alone?” he said. He then tracks these results over time.
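Pooling polls and then following them over time maps naturally onto a local-level (random-walk) Kalman filter, a standard tool for this kind of poll averaging. The sketch below is a simplified illustration, not the Pollster model itself: it assumes true support drifts as a random walk with a chosen daily variance (`drift_var`, a hypothetical tuning parameter) and that each poll carries only binomial sampling error. On a day with two polls and a vague starting estimate, the update reduces to a precision-weighted average of the pair, so the combined estimate is more precise than either poll alone.

```python
def poll_variance(share, n):
    """Sampling variance of a poll proportion, assuming simple random sampling."""
    return share * (1 - share) / n


def track_support(polls_by_day, init_mean, init_var, drift_var):
    """Local-level (random-walk) Kalman filter over daily poll readings.

    polls_by_day: list whose t-th element is a (possibly empty) list of
        (share, n) polls fielded on day t.
    drift_var: assumed day-to-day variance in true support (hypothetical).
    Returns one (mean, variance) filtered estimate per day.
    """
    mean, var = init_mean, init_var
    estimates = []
    for day_polls in polls_by_day:
        # Predict: true support may have drifted since the previous day.
        var += drift_var
        # Update: fold in each poll, weighted by its precision relative to ours.
        for share, n in day_polls:
            r = poll_variance(share, n)
            gain = var / (var + r)
            mean += gain * (share - mean)
            var *= 1 - gain
        estimates.append((mean, var))
    return estimates


# Hypothetical example: two same-day polls, then two days with no new polls.
history = track_support([[(0.50, 1000), (0.54, 1000)], [], []],
                        init_mean=0.5, init_var=1.0, drift_var=0.0001)
for day, (m, v) in enumerate(history):
    print(day, round(m, 4), round(v ** 0.5, 4))
```

With two hypothetical 1,000-person polls at 50% and 54%, the first day’s estimate lands near 52% with roughly half the variance of either poll; on the poll-free days that follow, the estimate holds steady while its uncertainty slowly grows.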

But other relationships matter for predictions too, Jackman said.

“If you did a poll in Michigan last week, you probably know something about Ohio this week. There’s not only an ‘over time’ element to this but also an ‘over space’ element. Previous election results give you a pretty strong hint as to which states go together politically.”
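The “over space” point can be made concrete by treating support in two politically similar states as jointly normal, so a poll in one state also shifts the estimate in the other. In this hypothetical sketch, state A stands in for Michigan and state B for Ohio; the prior means, variances and correlation `rho` are assumed inputs (in practice, something like past election results would inform them), and the poll again carries only sampling error. It is a two-state illustration of Gaussian conditioning, not either forecaster’s code.

```python
def update_two_states(mean_a, mean_b, var_a, var_b, rho, poll_share, poll_n):
    """Condition two correlated states' support on one poll from state A.

    Assumes a joint normal prior with correlation rho (hypothetical input).
    Returns (new_mean_a, new_mean_b, new_var_a, new_var_b).
    """
    cov_ab = rho * (var_a * var_b) ** 0.5
    r = poll_share * (1 - poll_share) / poll_n  # poll sampling variance
    s = var_a + r                               # variance of the poll reading
    resid = poll_share - mean_a                 # surprise in state A
    # State A moves by its own gain; state B moves through the covariance.
    new_mean_a = mean_a + (var_a / s) * resid
    new_mean_b = mean_b + (cov_ab / s) * resid
    new_var_a = var_a - var_a ** 2 / s
    new_var_b = var_b - cov_ab ** 2 / s
    return new_mean_a, new_mean_b, new_var_a, new_var_b


# Hypothetical example: "Michigan" (A) and "Ohio" (B) with correlation 0.8,
# and a 1,000-person Michigan poll that comes in above expectations.
print(update_two_states(0.52, 0.50, 0.0009, 0.0009, 0.8, 0.55, 1000))
```

With `rho` set to 0, the Ohio estimate is untouched; with a positive `rho`, a Michigan poll above expectations nudges Ohio upward as well and shrinks its variance, even though no one was polled there.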

Linzer and Jackman agree that their methodologies are similar and differ mostly in the details.

“[The disparities] are all minor differences and as you saw, they produce basically the same result,” Linzer said. “If you’re using the same evidence that ultimately predicts the right result, the modeling functions shouldn’t matter too much.”

“There’s not a lot between us that’s different,” Jackman said. “Drew’s model is very similar to mine. The conceptual elements I just described are the same ones in Drew’s model.”

Pundits proven wrong

“Anybody that thinks that this race is anything but a tossup right now is such an ideologue, they should be kept away from typewriters, computers, laptops and microphones for the next 10 days, because they’re jokes,” declared MSNBC host Joe Scarborough on Oct. 23, responding to Nate Silver’s prediction that the incumbent was heavily favored to win the presidency.

Quantitative analysts, or “quants” for short, such as Silver, however, had for some time been predicting the fairly comfortable win the president went on to enjoy.

Linzer was measured in his discussion of media commentary, saying “what we saw was that those sources of information are just not as reliable or trustworthy.”

“Polling data, which is scientific, systematic, well-understood, and can be integrated in a statistical framework that can remove all of that potential for bias and personal idiosyncrasy… The proof is in the pudding,” he said. “The people that used hard data and knew how to handle it got it right.”

Jackman attributed the gap between the pundits’ predictions and his own to differing motivations.

“What those guys and gals on Fox, CNN and MSNBC [do] is really a different game… Part of me just thinks that their desire to be provocative to get on TV to push a provocative message, to engage in partisan rallying for their team, may overshadow what in their heart they may know to be the real state of affairs,” he said.

Both men said they hope to see a change in the way political pundits air their opinions, and in the role pundits play, in future elections.

“What I hope happens is that the commentary that is related to the types of work that Nate Silver, Simon Jackman or I do, where you’re talking about trends in public opinion, whether campaign events matter, who’s going to win… these are things that are [able to be studied] rigorously using quantitative data,” Linzer said. “Hopefully that sort of commentary will be turned over to people like us.”

“What this cycle really helped in doing was draw a line between what goes on on TV and what goes on when you take a serious, cold, dispassionate look at the data,” Jackman said.

“Having been shown to be wrong so systematically this cycle, the idea that [pundits] would ante up again in 2016 and go on a limb like that may be less likely,” he added.