Stanford researchers in the Computational Vision and Geometry Lab have designed an autonomously navigating robot prototype that, they say, understands implicit social conventions and human behavior. Named “Jackrabbot” after the swift but cautious jackrabbit, the visually intelligent and socially amicable robot can maneuver through crowds and pedestrian spaces.

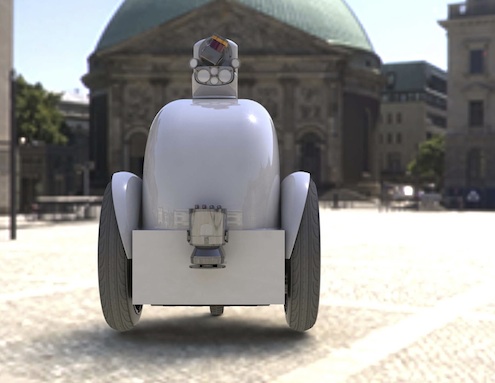

A white ball on wheels, the Jackrabbot is built on a Segway system and contains a computing unit and multiple sensors that capture the 3-D structure of the surrounding environment. 360-degree cameras and GPS further enhance the robot’s navigation and detection capabilities.

To interact smoothly in public settings, the robot has to know, among other skills, how to avoid someone naturally, how to yield the right-of-way and how to give people personal space.

“These are some of the rules we are aware of; there might even be rules we are not aware of,” said postdoctoral researcher Alexandre Alahi, who works on the Jackrabbot. “We wanted to see how we can learn all these [social conventions] with a machine learning approach and see if we can simulate them and predict them.”

The Jackrabbot research team, led by assistant professor of computer science Silvio Savarese, consists of Alahi, Chris Cruise M.S.’16, network administrator and IT manager Patrick Goebel, visiting lecturer Jerry Kaplan, Alexandre Robicquet M.S.’17 and Amir Sadeghian, a Ph.D. candidate in electrical engineering. The researchers have two focuses: developing the robot’s machine learning algorithm for social navigation and awareness, and using the robot as a research tool to study applications of artificial intelligence.

Alahi said the robot was inspired by self-driving cars and a desire to push artificial intelligence to less structured environments.

“For the self-driving car you can define a set of rules to encode the car to move around,” Alahi said. “We were wondering why we don’t have these robots in pedestrian-only areas, like on campus or airport terminals, to solve the problems of assisting people to move around.”

Currently, the team uses the Jackrabbot, accompanied by two team members, as a delivery robot around Stanford’s School of Engineering, but the researchers foresee many other uses. Alahi and Savarese said that robots similar to the Jackrabbot could more efficiently aid the elderly and the blind, deliver medicine in a hospital, patrol as a security guard or serve as a personal assistant.

The team spent two years working on the Jackrabbot, building the robot with the help of mobile robot company Stanley Innovation and implementing algorithms that predict human behavior, which allow the Jackrabbot to move more safely in crowds.

Savarese said the team’s greatest challenge was developing the machine learning algorithm, which required gathering a large amount of data on how humans interact. Machine learning allows computers to learn from data and make predictions without being explicitly programmed.

“We had no algorithm for social understanding,” Savarese said. “We have certain rules, but there are subtle cues that we don’t necessarily know.”

Robicquet said that the Stanford mall robot that hit a toddler earlier this month shows how difficult it is to detect and predict the wide range of human movement. Alahi added that the robots currently in use are often too cautious, waiting until all obstacles are out of sight before moving. Still, Alahi believes that within several years, robots like the Jackrabbot will become as widespread as self-driving cars, which are themselves on the path to everyday use.

The team intends to build new robots that improve on the Jackrabbot’s features, for example by adding a face that projects friendliness through facial expressions, and arms so that the robot can interact more with its environment.

Savarese said he finds the Jackrabbot and the field of artificial intelligence particularly interesting because robots could potentially perceive sensory input, and even emotions, just as humans do.

“This level of understanding is something that always fascinated me, so how could we design a robot or computer that is able to interpret those sensory signals and understand the thematics and high-level concepts?” Savarese said. “That has been what my career has been about: turning blind sensory signals into something that has meaning.”

Contact Caitlin Ju at ju.caitlin ‘at’ gmail.com.