Stanford University had a notable presence at the 37th International Conference on Machine Learning (ICML) last week, with a leading number of citations and papers, ahead of other machine learning powerhouses MIT, U.C. Berkeley, Carnegie Mellon and Princeton.

The ICML 2020, which was held from July 12 to July 18, was originally scheduled to take place in Vienna, Austria, but due to the COVID-19 pandemic, the conference was moved to a virtual format.

The ICML is a leading academic conference on machine learning, at which academic and industry teams from around the world present cutting-edge research in the field. This year, only 1,088 papers were selected from 4,990 submissions, an all-time low acceptance rate of 21.8%, according to an article published on Medium by Gleb Chuvpilo, a partner at Thundermark Capital, a firm that invests in AI and robotics.

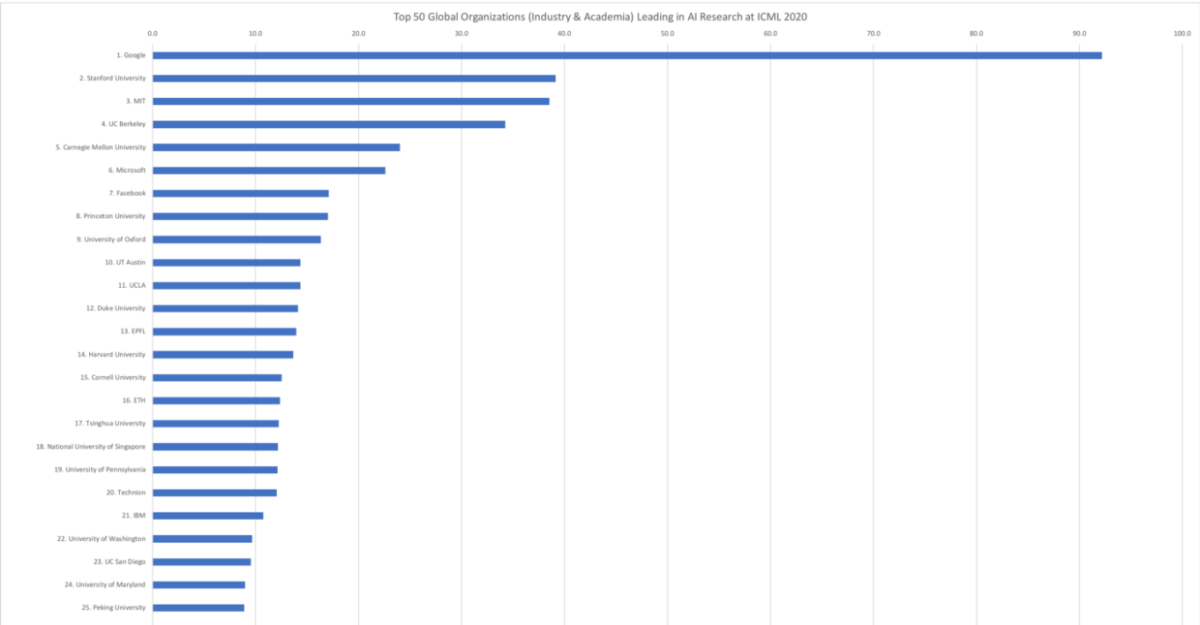

Stanford also proved itself to be a leader in artificial intelligence research when compared with industry participants, second only to Google and ahead of leading companies like Microsoft, Facebook and IBM. This year, the Stanford Artificial Intelligence Laboratory (SAIL) presented 40 papers, in addition to the other papers in which Stanford faculty and researchers were authors or contributors.

Stanford’s Dr. Or Litany, a postdoctoral research fellow in the computer science department, was a member of a multinational team whose paper won one of the conference’s two Outstanding Paper Awards. Litany is a member of the Geometric Computation Group at Stanford, headed by computer science and electrical engineering professor Leonidas Guibas ’76. Litany’s paper, titled “On Learning Sets of Symmetric Elements,” was co-authored by Haggai Maron, a research scientist at NVIDIA, and Drs. Ethan Fetaya and Gal Chechik of Bar Ilan University. Chechik was previously a postdoctoral researcher in Stanford’s computer science department and is now Director of Artificial Intelligence at NVIDIA.

While Litany’s research background is in 3D deep learning, this particular paper focuses on determining “what neural network architectures should be used when learning across sets of complex objects.” Deep learning is a branch of machine learning in which the main tool is an artificial neural network, Litany said, and the main concept of machine learning is that “instead of prescribing a solution to a problem by hand, we can teach a computer to solve it by showing it examples.”

Litany’s work presents a model that “achieves superior results in a range of problems,” and has applications in machine selection and image enhancement, including deblurring for 2D image bursts, as well as multiview reconstruction for 3D images. One example would be taking sets of images of furniture for Facebook Marketplace and digitally reconstructing the furniture so that it can be viewed from all angles. Litany emphasized that the machine learning model developed in his work “is not something unique for images, graphs or sound signals; anyone working on deep learning applications should consider this type of structure.”

The ICML 2020 Honorable Mention Award was also won by a team with Stanford roots, as one of its members is Dr. Ilya Sutskever, a former postdoctoral researcher at Stanford and now the chief scientist at OpenAI. Their paper, “Generative Pretraining from Pixels,” examines whether a model trained to autoregressively predict pixels can learn useful representations of images.

Participation at the ICML is valued for a number of reasons, including the opportunity to have work reviewed by an esteemed board, the exposure to groundbreaking work being presented by other international teams of research scientists and the opportunities for connection and collaboration across institutions. According to Annie Marsden, a Ph.D. candidate in Stanford’s computer science department who focuses on machine learning, “computer science is really collaborative across universities, and participation at conferences like ICML helps further that collaboration because it helps us stay aware of what researchers at other institutions are doing.”

“It keeps you aware of what the whole world is up to,” she said.

Contact Jack Murawczyk at dmurawczyk23 ‘at’ csus.org.