Stanford artificial intelligence (AI) researchers took down the public demo of Alpaca, their large language model (LLM) chatbot, on March 21, less than a week after its initial release, citing “hosting costs and the inadequacies of our content filters.” The source code remains publicly available. Although the demo is now offline, the team’s initial announcement reported that Alpaca showed “very similar performance” to OpenAI’s GPT-3.5 model.

Alpaca arrived amid a flurry of AI releases. In one month alone, the world has seen the release of GPT-4, the Midjourney v5 image generator and Google’s Bard chatbot. Additionally, NVIDIA, one of the largest AI hardware companies by market share, announced expanded computing services for developing and deploying AI models.

The research team, which developed Alpaca at the Stanford Center for Research on Foundation Models (CRFM), includes five Ph.D. students and three faculty members.

“Alpaca is intended only for academic research,” according to the announcement. Because additional safety features have yet to be implemented, the model will not be rolled out for general use in the foreseeable future.

“We think the interesting work is in developing methods on top of Alpaca (since the dataset itself is just a combination of known ideas), so we don’t have current plans along the lines of making more datasets of the same kind or scaling up the model,” wrote assistant professor and Alpaca researcher Tatsunori Hashimoto of the Computer Science Department, in a statement to The Daily.

Alpaca is based on Meta AI’s LLaMA 7B model, named for its seven billion parameters. To fine-tune LLaMA, the CRFM researchers generated training data using a method known as “self-instruct,” collecting 52,000 instruction-following examples from OpenAI’s text-davinci-003 (colloquially known as GPT-3.5).

“As soon as the LLaMA model came out, the race was on,” said adjunct professor Douwe Kiela. Kiela, who is part of the Symbolic Systems Department at Stanford, previously worked as an AI researcher at Facebook and as head of research at the AI company Hugging Face.

“Somebody was going to be the first to instruction-finetune the model, and so the Alpaca team was the first … and that’s one of the reasons it kind of went viral,” Kiela said. “It’s a really, really cool, simple idea, and they executed really well.”

Meta AI released the LLaMA model’s weights earlier this year under a “noncommercial license focused on research use cases,” according to its announcement. “Access to the model will be granted on a case-by-case basis to academic researchers; those affiliated with organizations in government, civil society, and academia; and industry research laboratories around the world.”

Weights, also known as a model’s parameters, are numerical values describing the strength of connections between different parts of a neural network. Together, these values approximate and store the patterns and statistical relationships found in the large datasets on which LLMs are trained.
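To make the idea of weights concrete, here is a minimal Python sketch of a single toy layer. It is an illustration only, not code from Alpaca or LLaMA: the names `weights`, `biases`, `layer` and `count_parameters`, and all of the values, are hypothetical. Each output is a weighted sum of the inputs, so a larger weight means a stronger connection. LLaMA 7B holds roughly seven billion such numbers spread across many far larger layers.

```python
# Hypothetical toy layer: 3 inputs connected to 2 output units.
# Each number in `weights` is the strength of one input-to-output connection.
weights = [
    [0.5, -0.25, 1.0],   # connections into output unit 0
    [0.75, 0.125, -0.5], # connections into output unit 1
]
# Biases are also learned parameters, one per output unit.
biases = [0.0, 0.25]


def layer(inputs):
    """Return each output unit's weighted sum of the inputs plus its bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def count_parameters():
    """Count every learned number: all weights plus all biases."""
    return sum(len(row) for row in weights) + len(biases)


print(layer([1.0, 2.0, 3.0]))  # prints [3.0, -0.25]
print(count_parameters())      # prints 8
```

Changing any single weight changes how strongly one input influences one output, which is exactly what training adjusts.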

The researchers compared Alpaca’s outputs for specific prompts with the outputs GPT-3.5 produced for the same prompts. Over time, Alpaca’s behavior converged toward that of GPT-3.5. Hashimoto said that the LLaMA base model is “trained to predict the next word on internet data” and that instruction-finetuning “modifies the model to prefer completions that follow instructions over those that do not.”

Generally, training involves feeding vector representations of examples from a dataset through the layers of a neural network; for each example, a prediction comes out the other end. The quality of each prediction is measured against a “label,” or ideal output, which is commonly provided by human annotators. Depending on how close or far the prediction is from the label, the model readjusts its parameters to improve its predictions on future inputs, much like turning the pegs of a violin to get just the right sound from its strings.
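The predict, compare and adjust cycle can be sketched in a few lines of Python. This is a deliberately simplified, assumed setup rather than the Alpaca training code: a hypothetical model with a single weight learns labels that follow y = 3x, using plain gradient descent on squared error. Real LLM training adjusts billions of parameters at once using backpropagation and typically a different loss function, but the underlying idea of nudging parameters to shrink the gap between prediction and label is the same.

```python
# Hypothetical toy dataset: each example pairs an input with its "label" (ideal output).
# The labels follow y = 3x, a relationship the model has to discover.
examples = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0), (4.0, 12.0)]

weight = 0.0          # the model's single parameter, starting from a poor guess
learning_rate = 0.01  # how far to turn the "peg" on each adjustment


def predict(x, w):
    """The toy model's prediction for input x given weight w."""
    return w * x


for epoch in range(200):
    for x, label in examples:
        prediction = predict(x, weight)
        error = prediction - label          # how far the prediction is from the label
        gradient = 2 * error * x            # derivative of error**2 with respect to weight
        weight -= learning_rate * gradient  # nudge the weight to shrink the error

print(round(weight, 4))  # prints 3.0
```

Each pass over the examples shrinks the remaining error, so after 200 passes the weight has settled at the value that reproduces the labels.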

OpenAI’s terms of use prohibit using “output from the Services to develop models that compete with OpenAI,” which the researchers referenced in their announcement.

Whether OpenAI can restrict the commercialization of models that emulate its technology’s outputs is “a legal prospect that’s never been tested,” according to computer scientist Eliezer Yudkowsky, who researches AI alignment.

I don't think people realize what a big deal it is that Stanford retrained a LLaMA model, into an instruction-following form, by **cheaply** fine-tuning it on inputs and outputs **from text-davinci-003**.

— Eliezer Yudkowsky (@ESYudkowsky) March 14, 2023

It means: If you allow any sufficiently wide-ranging access to your AI…

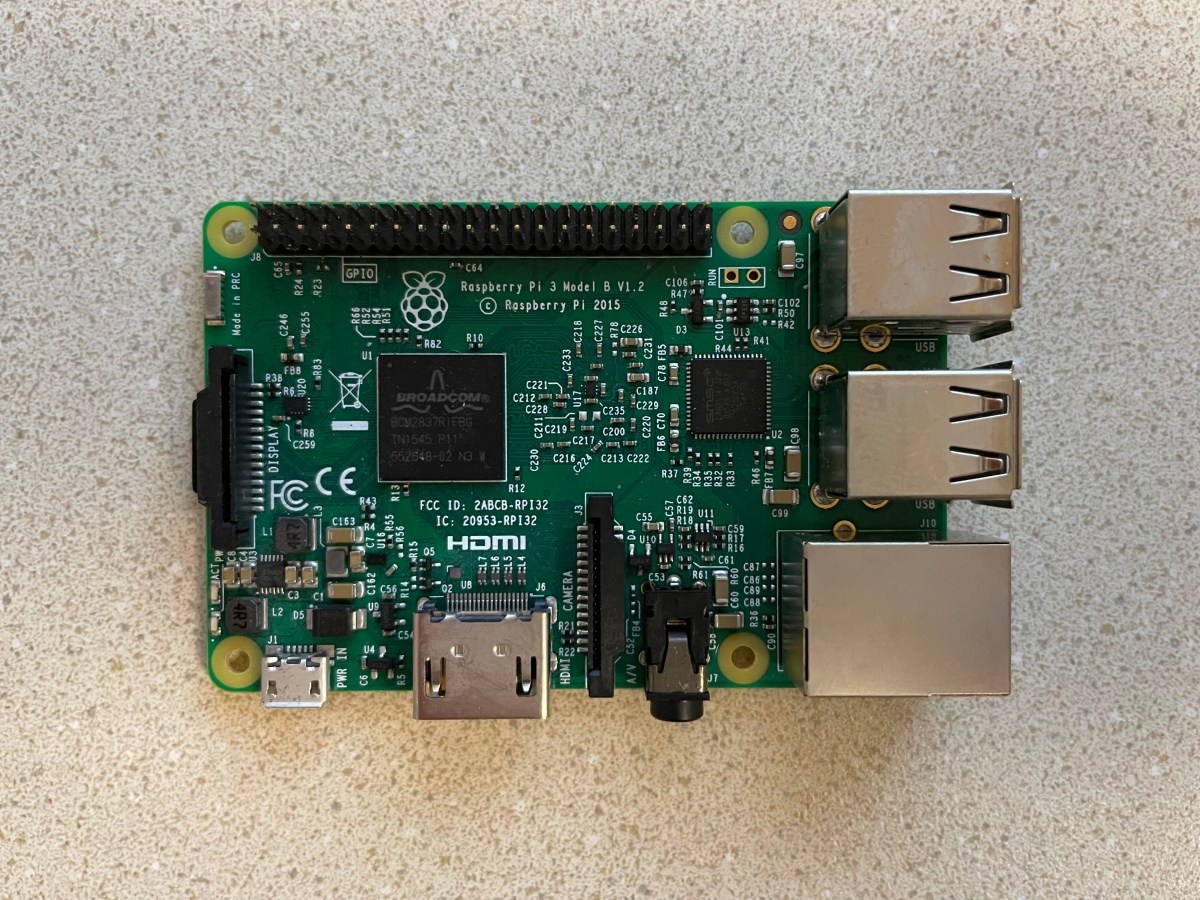

Alpaca’s source code remains public and continues to draw attention. The GitHub repository has been starred more than 17,500 times, and more than 2,400 users have “forked” the code, creating their own copies of the project to modify. Last week, Eric J. Wang ’19 M.S. ’20 published a spinoff of CRFM’s model called Alpaca-LoRA on GitHub. A live demo of Alpaca-LoRA is currently available on the Hugging Face website, and according to the project’s GitHub page, the model can also run on a Raspberry Pi 4 Model B with 4 GB of random-access memory (RAM).

The Daily has reached out to Wang for comment.

Although Alpaca took the CRFM team less than two months to complete and cost less than $600, Hashimoto said he does not see the research as changing the dynamics among companies with economic incentives tied to LLMs. “I think much of the observed performance of Alpaca comes from LLaMA, and so the base language model is still a key bottleneck,” Hashimoto wrote.

With AI systems becoming part of everyday life, there is a growing debate in academic circles over when to publish source code and how transparent companies ought to be about their training data and methods, according to Kiela.

“I think one of the safest ways to move forward with this technology is to make sure that it is not in too few hands,” he said.

“We need to have places like Stanford, doing cutting-edge research on these large language models in the open. So I thought it was very encouraging that Stanford is still actually one of the big players in this large language model space.”