A researcher warned against the use of artificial intelligence and predictive policing by the criminal justice system, referencing biased data sets, at a talk on Monday.

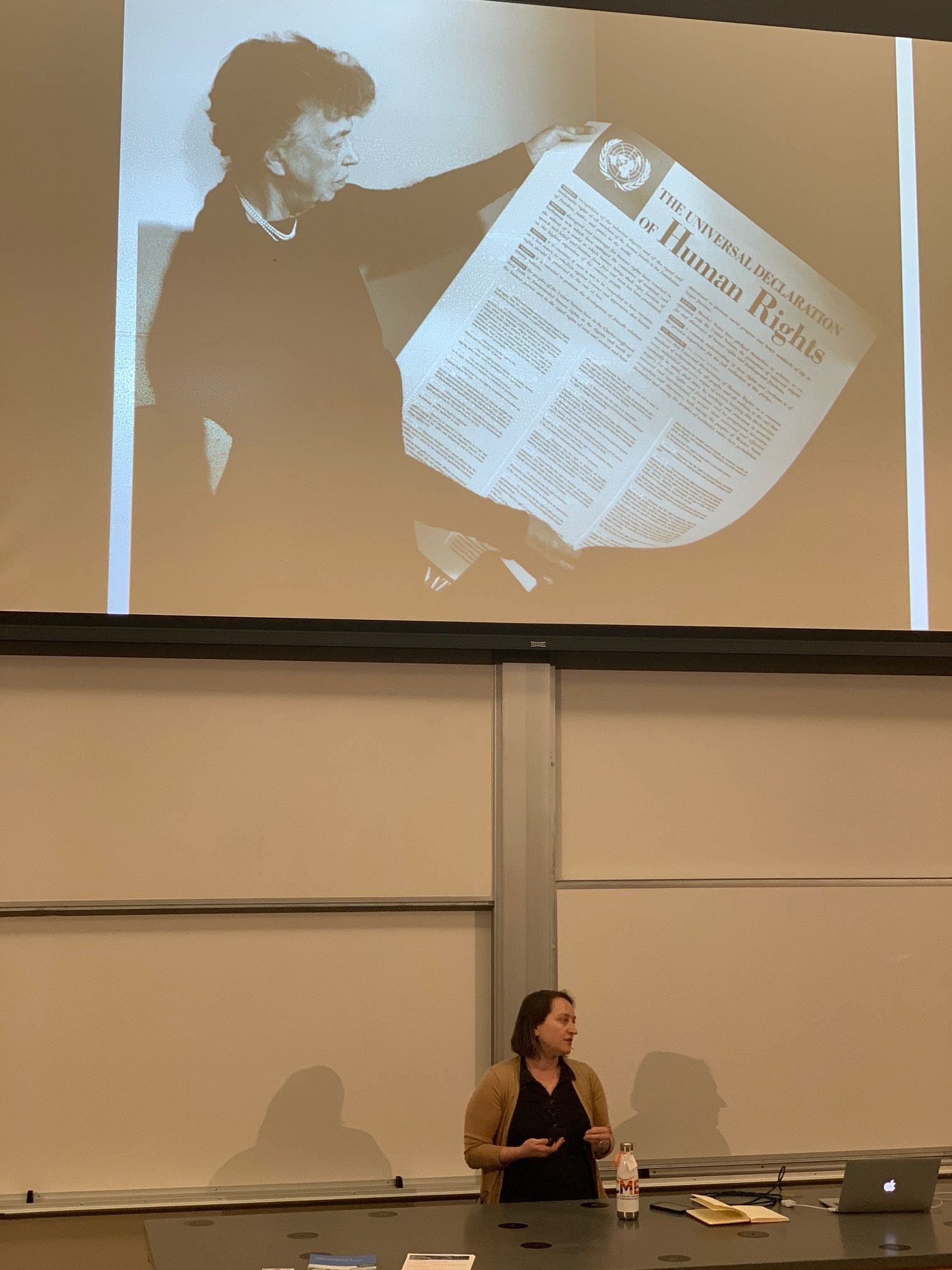

Megan Price, executive director of the San Francisco-based nonprofit Human Rights Data Analysis Group (HRDAG), spoke with students in the seminar series “AI for Good” about various ways to use artificial intelligence (AI) when fighting for human rights.

She singled out predictive policing, which utilizes technology to predict where crimes are likely to happen and deploys resources accordingly, as a potentially harmful use of AI.

Price said the data used for this type of law enforcement is “biased” and “incomplete” because it is based solely on crimes the police know about. This data tells police departments to keep investigating neighborhoods with high crime rates, but it doesn’t necessarily tell them anything about the crime that is actually occurring.

To obtain unbiased arrest data, Price said, law enforcement would have to know about every crime that has ever been committed in a specific region.

“That sounds like more surveillance; that might not be better,” she said.

Price acknowledged that the models behind AI algorithms are reductionist by nature and will “make mistakes.” She encouraged students to consider the “potential missing data and biases” in models such as those used for predictive policing, as well as the costs of these mistakes and biases.

“The cost that is borne when the predictive policing model makes a mistake is by community members,” she said. “And it’s the marginalized and the vulnerable members of our community who have been over-policed.”

Price compared predictive policing to the use of AI in the effort to discover hidden graves in Mexico. Drug cartel-related violence has led to thousands of deaths throughout the country. In September 2019, Mexican government officials announced they had discovered over 3,000 hidden graves and nearly 5,000 bodies since 2006. Price and her team work with Mexican investigators to use simple algorithms to predict with surprising accuracy where graves might be hidden.

Comparing the two uses of AI, Price urged the audience to weigh the costs and benefits of both applications.

“When we get it wrong in Mexico, we’re inefficiently using our resources,” she said. “But we would argue in the case of Mexico, that the use of this tool to predict places to conduct investigations is an improvement over the status quo. And we would argue that in the case of predictive policing it is not.”

HRDAG is made up of data scientists, statisticians, computer scientists and political scientists. They work with partner organizations to strengthen human rights advocacy efforts by providing technical expertise.

Price explained that data science “doesn’t necessarily need to be front and center,” calling it a “technical appendix.”

“But it has to be right,” she said. “In human rights work we talk a lot about speaking truth to power. And if that’s what you’re doing, then you have to know that your truth can stand up to what we think of as adversarial environments.”

She and HRDAG have a “moral obligation to do the absolute best work that is technically possible,” Price said. “And that’s what drives all the projects that we take on, because we believe that we have that obligation to pay due respect to the victims and the witnesses who are sharing their story with us.”

In human rights advocacy, Price and her team almost never work with data that is representative or complete.

“It’s all what people were able to document during one of the worst periods of their lives,” Price said. “It’s still very valuable. But our job as statisticians or as data scientists is to analyze that data appropriately, given that it’s incomplete and not representative.”

HRDAG has worked with nations across the globe to prove that human rights abuses took place. Price explained that the group is accustomed to working in spaces where people want to oppose truth and facts, referring to denial as “a common tool of perpetrators of violence and violators of human rights.”

Recently, representatives from the organization have testified in trials prosecuting the former president of Serbia as well as the former president of Chad. Both leaders were accused of human rights abuses, which they deny took place. HRDAG was able to use data science to prove that these atrocities did in fact take place during their times in office.

Price believes that there are many opportunities for artificial intelligence to aid in human rights advocacy efforts. However, she said these models are not magic.

“They’re just like anything else,” she said. “They’re another tool in our toolkit, and it needs to be subjected to the same kind of evaluation, and the same kind of questions about positive and negative outcomes.”

Contact Emma Talley at emmat332 ‘at’ stanford.edu